7 Data analysis in the current era

So far we have reviewed numerous techniques to collect spatial transcriptomics data. In this chapter, we review computational methods to analyze data generated by current era techniques and methods that, while only having WMISH, FISH, or ISH as spatial data, involve scRNA-seq data as well. For a publication to be included in the “Analysis” sheet of this database, it must either focus on a data analysis method, or present alongside new data, sophisticated data analysis going beyond using existing packages. While some data analysis methods originally not designed for spatial data can be used for spatial data, this chapter is about methods designed specifically with spatial data in mind. This means that the methods should be demonstrated on a spatial transcriptomic dataset in the publication, even if not explicitly using spatial coordinates.

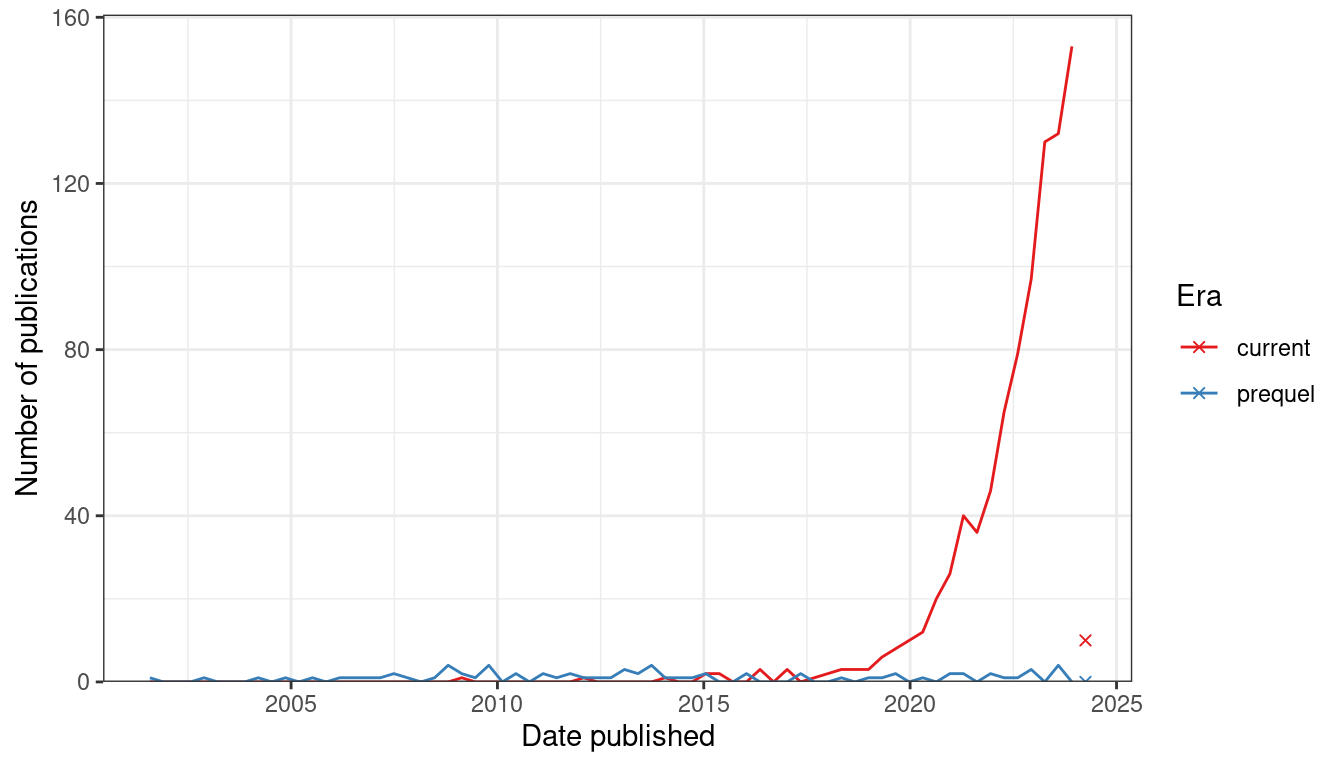

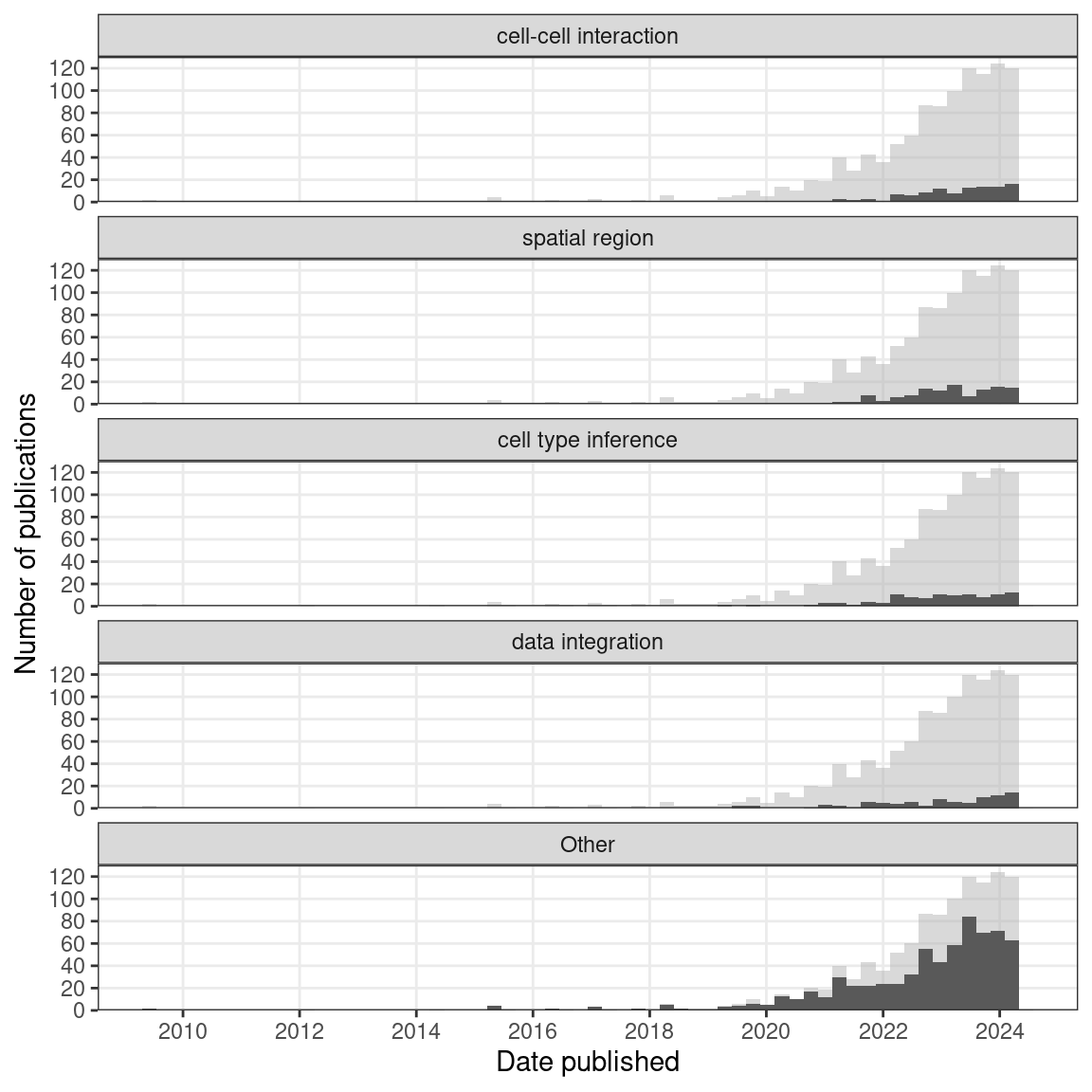

Figure 7.1: Number of publications over time for current era and prequel data analysis. Bin width is 120 days. Preprints are included for this figure. The x-shaped points show the number of publications from the last bin, which is not yet full.

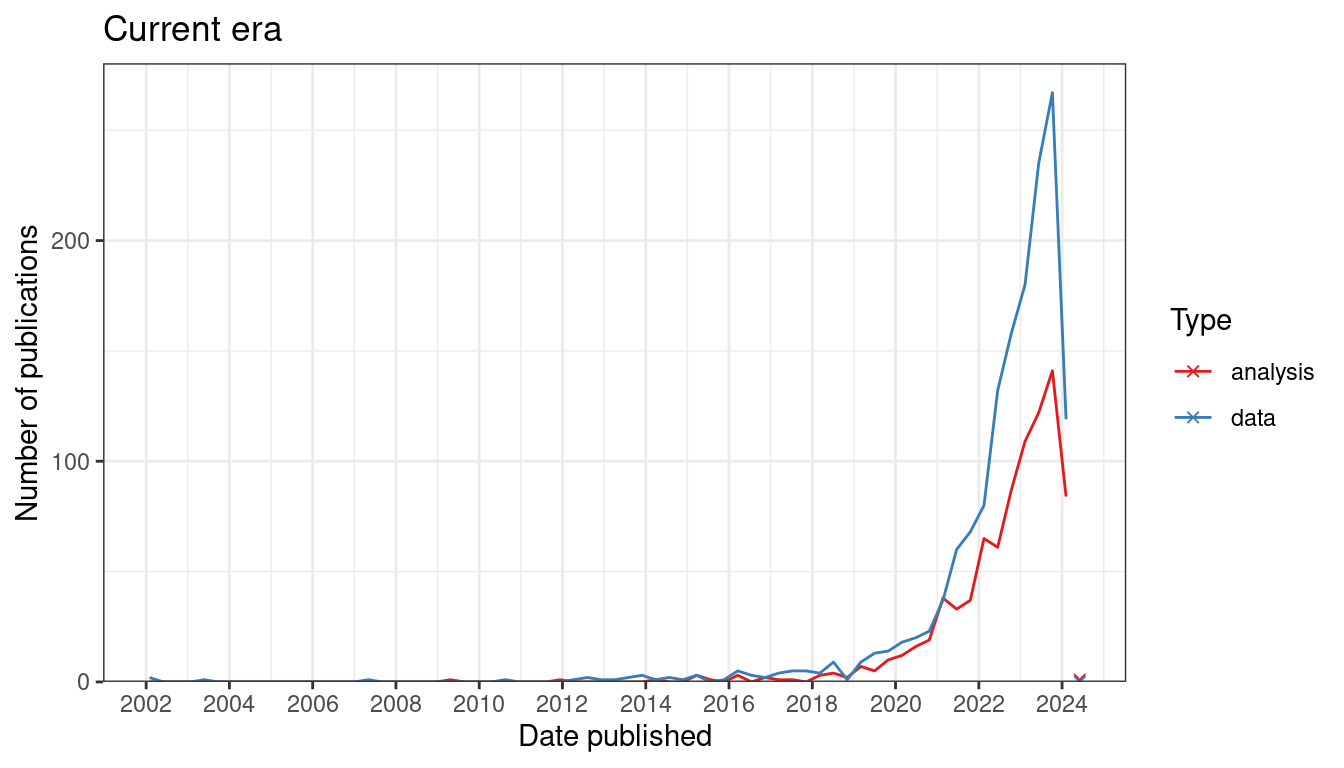

Since 2019, there has been a sharp increase in interest in current era data analysis (Figure 7.2). If our collection of prequel data analysis literature is somewhat representative and complete, then interest in current era data analysis dwarfs the golden age of prequel data analysis from 2008 to 2014 (Figure 7.1). As already shown, interests in current era data collection increased sharply since 2018 (Figure 4.2, Figure 7.2), although not as sharply as in data collection (Figure 7.2).

Figure 7.2: Number of publication over time for current era data collection and data analysis. Bin width is 120 days. The x-shaped points show the number of publications from the last bin, which is not yet full.

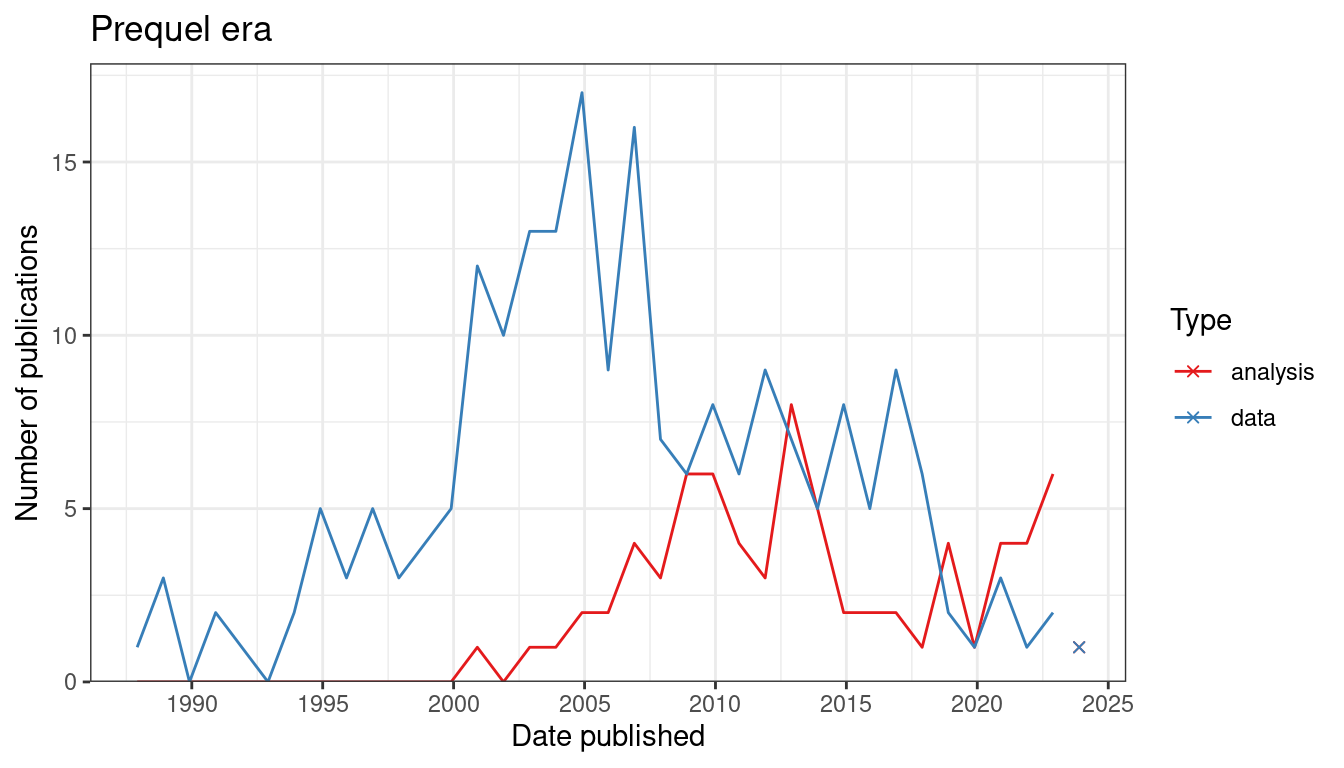

Figure 7.3: Number of publications over time for prequel data collection and data analysis. Bin width is 365 days. The x-shaped points show the number of publications from the last bin, which is not yet full.

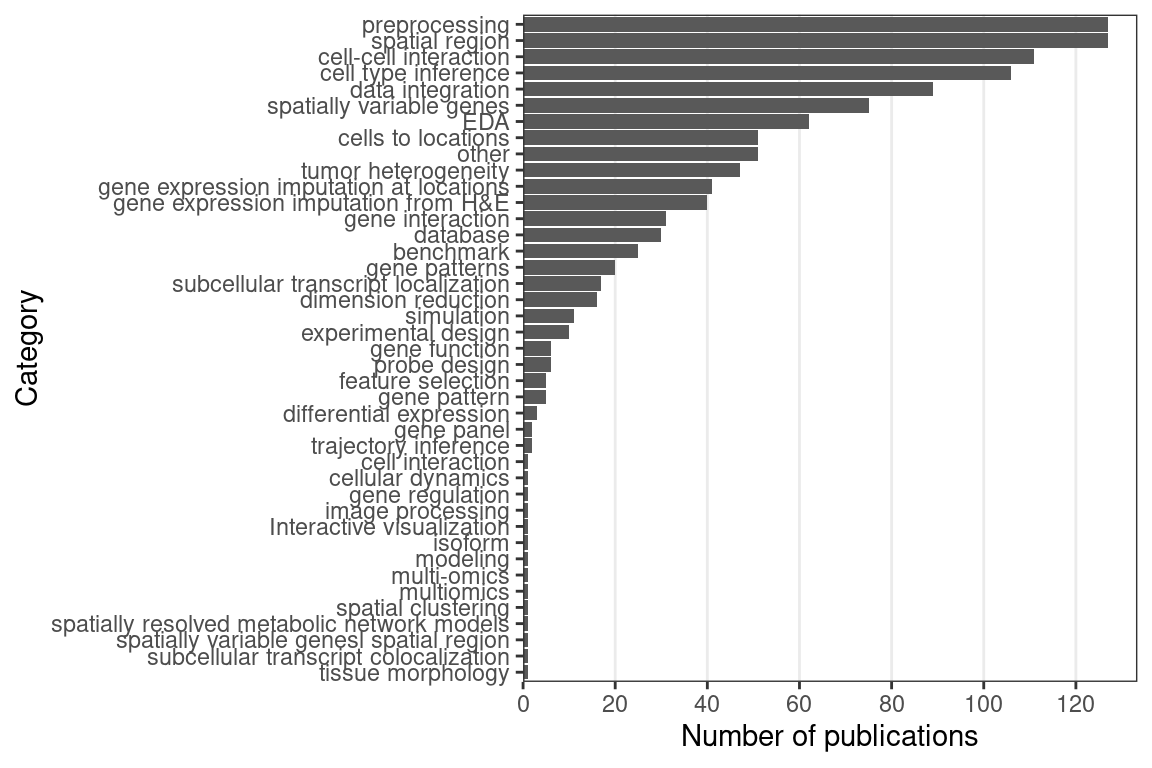

In contrast, in the prequel era, interest in data analysis peaked after the peak for data collection, and eventually interest both eventually diminished but continues (Figure 7.3). There are many different types of data analysis, the ones with the most interest are finding spatial regions, preprocessing (including image processing and quality control), cell type inference (especially cell type deconvolution of Visium spots), and cell-cell interaction (Figure 7.4). While mapping dissociated cells to spatial locations on a spatial reference used to be at the top, there has been more interest in the other topics mentioned just now.

Figure 7.4: Number of publications for each category of data analysis; note that the same publication can fall into multiple categories.

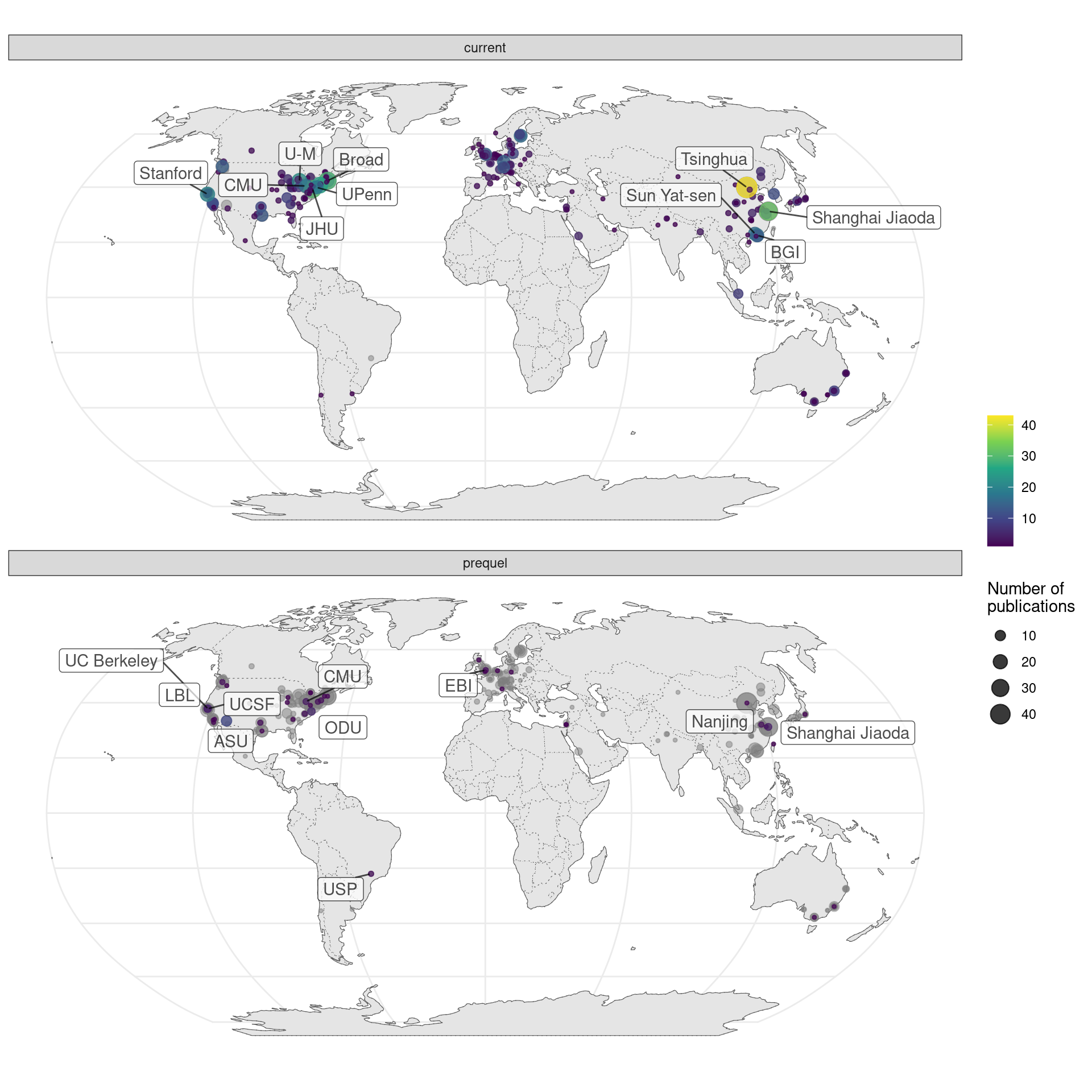

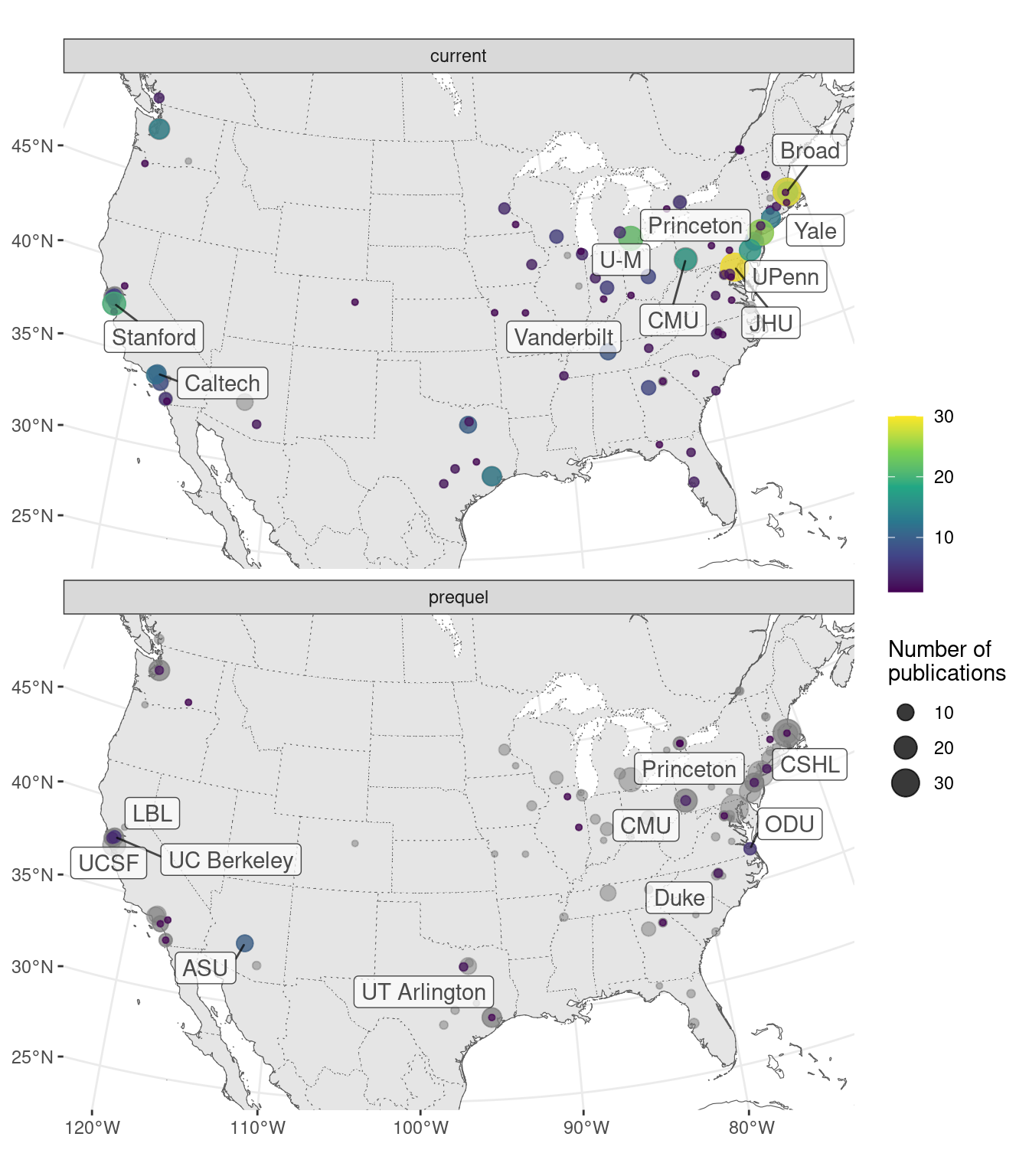

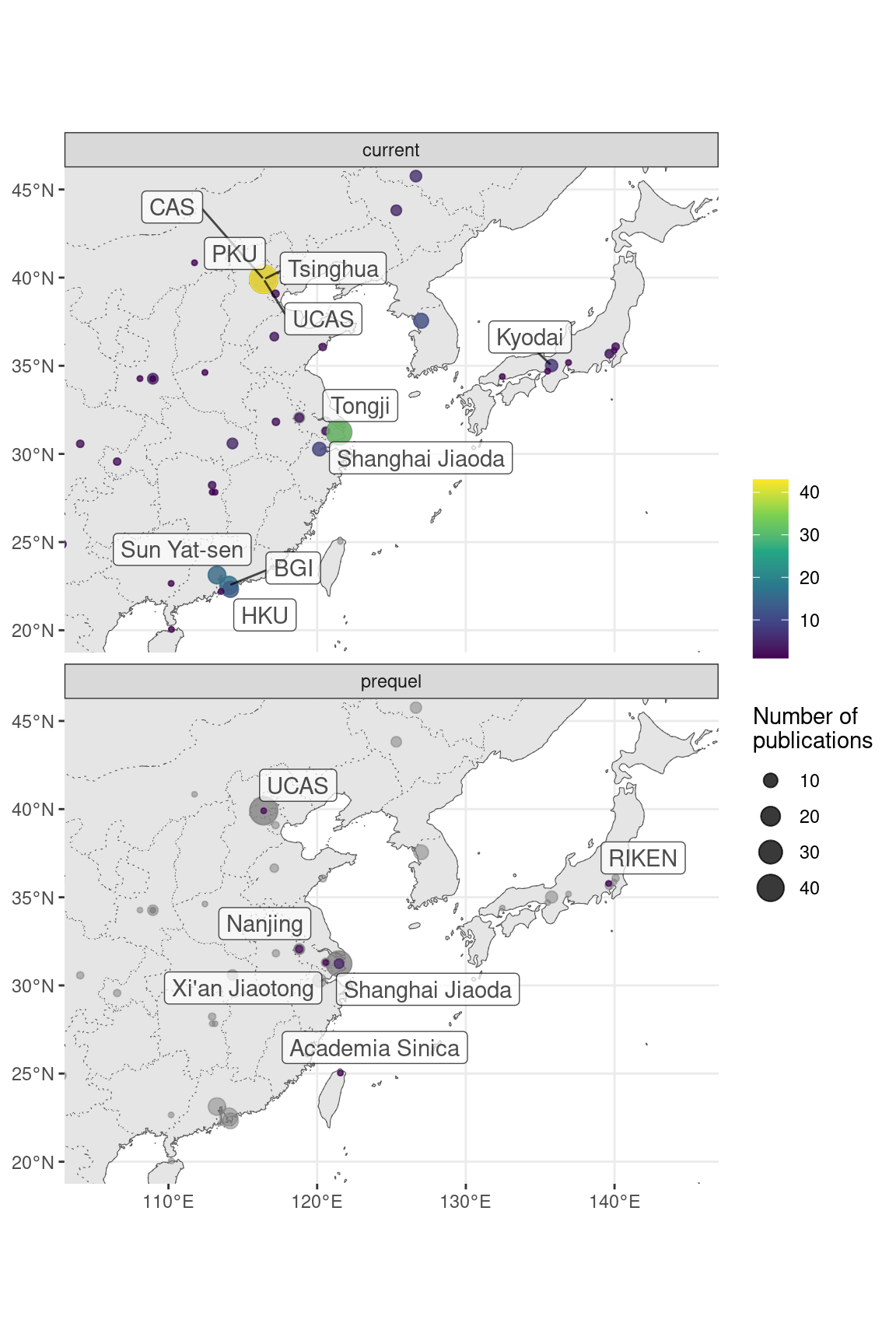

Several methods for cell type deconvolution in array based techniques that don’t have single cell resolution were developed (cell type inference), but the drastic growth in data analysis seems to be driven by multiple categories of analyses (Figure 7.5). Top contributors to data analysis methods in the current and prequel eras are different as well. In the current era, while many less well-known institutions have contributed to data analysis, the top contributors are an elite club. Among the top contributors in the prequel era are less famous institutions such as Arizona State University (ASU), Old Dominion University (ODU), and Lawrence Berkeley National Laboratory (LBL), which developed the BDTNP and the Fly Enhancer atlases (Figure 7.6).

Figure 7.5: Number of publications over time broken down by type of data analysis. The 3 categories most popular in the past year are shown, and the others are lumped into ‘Other’. Bin width is 90 days.

Figure 7.6: Map of where first authors of current era and prequel data analysis papers were located as of publication. Each point is a city and point size is number of publications from all institutions in the city. Top 10 institutions in each era are labeled.

Figure 7.7: Map of where first authors of current era and prequel data analysis papers were located as of publication around continental US. Top 5 institutions in each era are labeled.

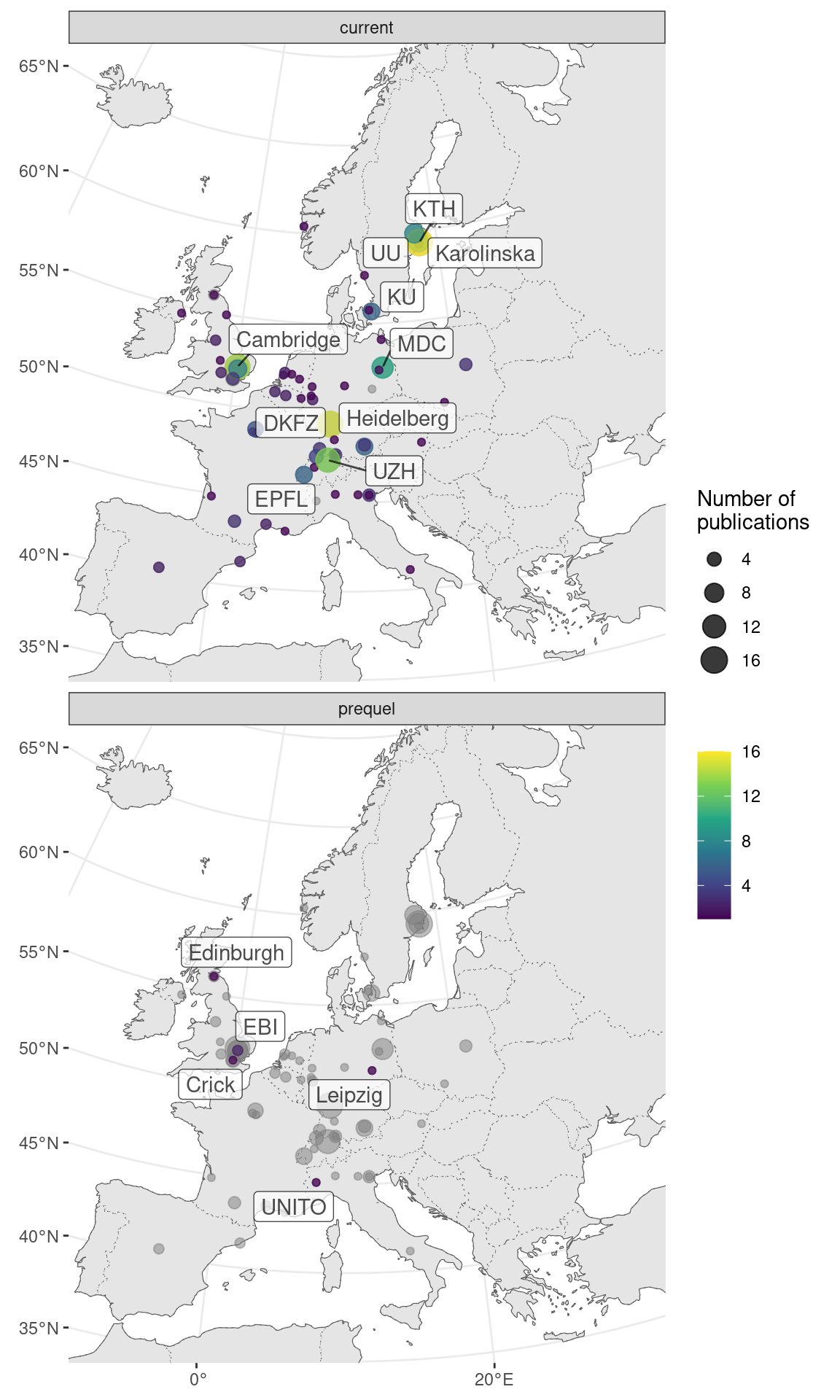

Figure 7.8: Map of where first authors of current era and prequel data analysis papers were located as of publication in western Europe. Top 5 institutions in each era are labeled.

Figure 7.9: Map of where first authors of current era and prequel data analysis papers were located as of publication in northeastern Asia. Top 10 institutions in each era are labeled.

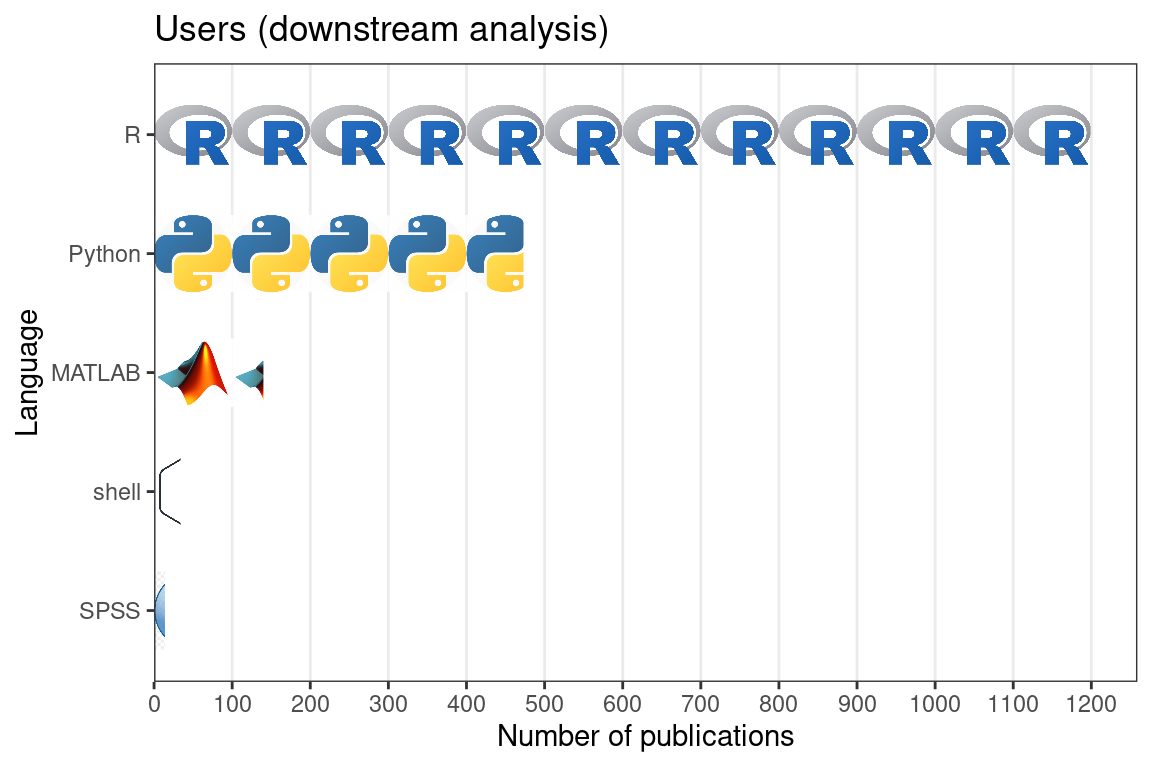

In our database, we have recorded programming languages used in data analysis or package development. All programming languages that played a major role in the project were recorded. For downstream analysis, this includes languages of the user interface of existing packages used and languages of new functions written for the project. For package development, this includes any language used to write the package essential to the functioning of the package. In publications that focus on data collection, R is by far the most popular programming language used in downstream data analysis (Figure 7.10). The second most popular is Python, and then MATLAB, which is more common in smFISH (Figure 5.28) and ISS for its image processing functionality. Python is used for both image processing and other types of analyses. C and C++ are not as common in downstream analysis.

Figure 7.10: Number of publications for data collection using each of the 5 most popular programming languages for downstream data analysis.

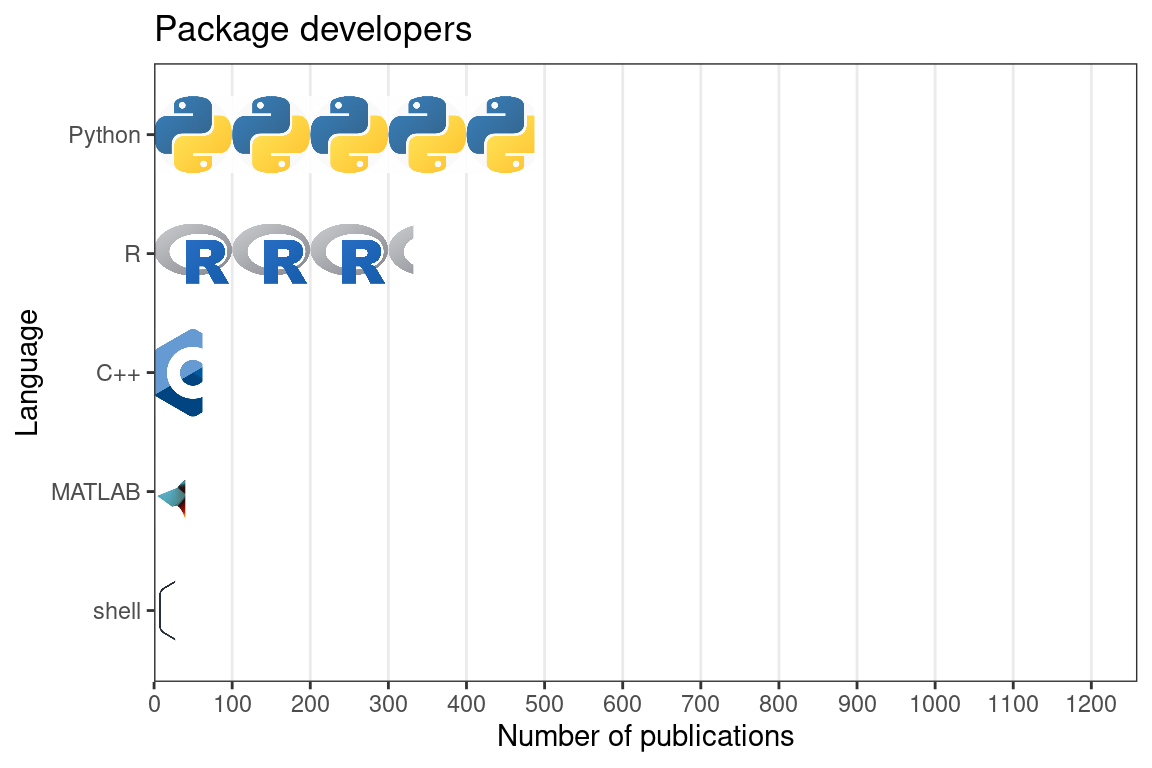

Figure 7.11: Number of publication for data analysis using each of the 5 most popular programming languages for package development. In this and the previous figure, each icon stands for 100 publications, and the x axes of both figures are aligned. Note that multiple programming languages can be used in one publication.

The same top 5 programming languages are the most common for developing data analysis packages (Figure 7.11). Python is the most popular, especially for packages involving deep learning, image processing, using Torch for optimization, or are command line tools. R follows, and is more popular for exploratory data analysis (EDA) and data visualization, but sometimes both R and Python are used in the same package. Other languages aren’t nearly as commonly used for packages reported on in our database. The above observations about usage of R and Python seem to reflect the broader cultural differences between the R and Python communities; the former caters more to the users and statisticians who do not specialize in computer science, while the latter caters more to developers and computer science specialists. MATLAB is not as commonly used for package development. While popularity of Python and R have grown (and some others such as Julia), the popularity of MATLAB seems more level (Figure 7.12). C and C++ are more common in package development than in downstream analysis, but are often used in conjunction with either R or Python or both as C and C++ are used for performance while R and Python are for user interface. With packages such as reticulate, rpy2, basilisk, Rcpp, and Cython, the most popular open source languages can be made interoperable to each other to some extent, making use of the best resources from each language.

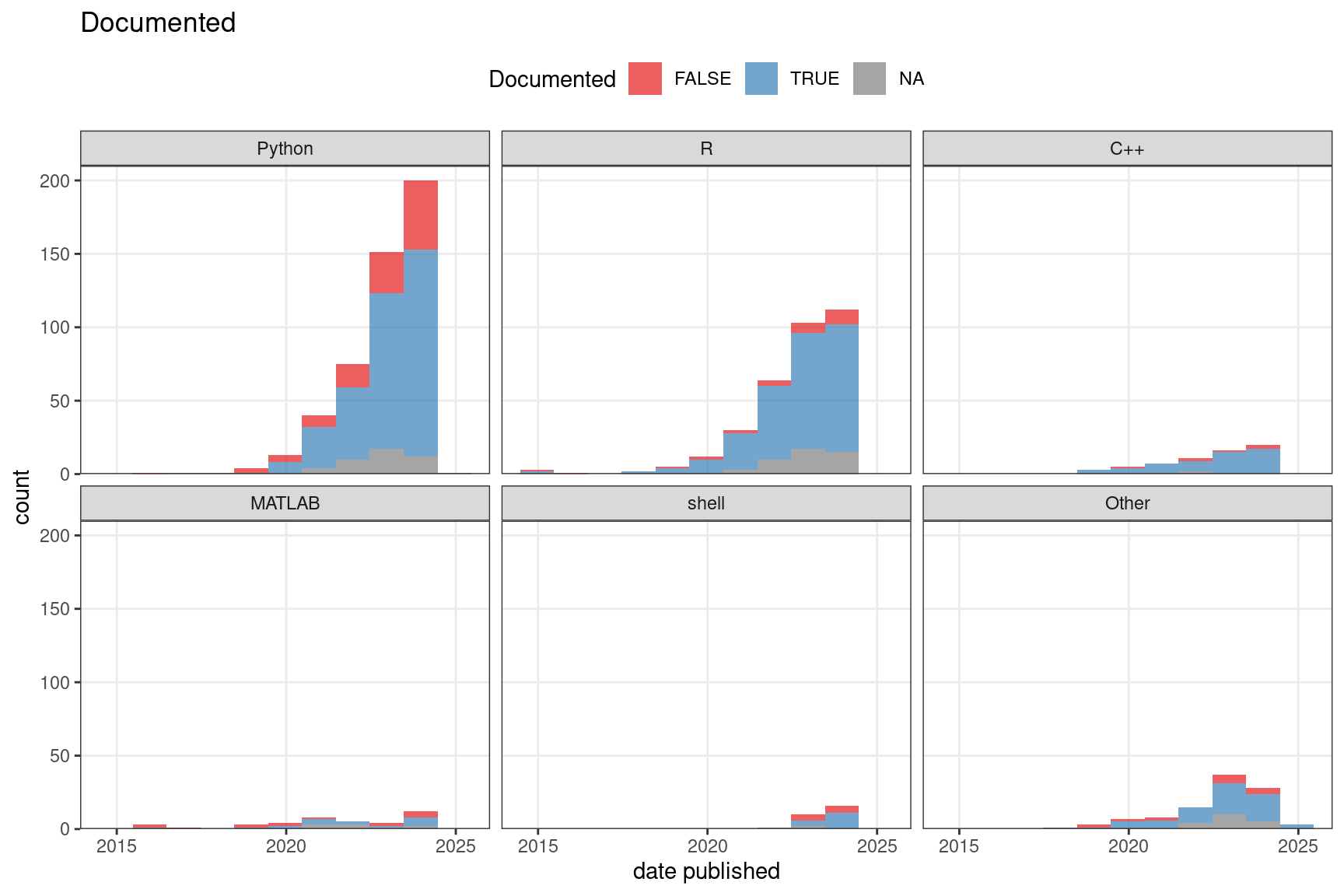

Figure 7.12: Among data analysis publications, the number of packages that are or are not well documented over time.

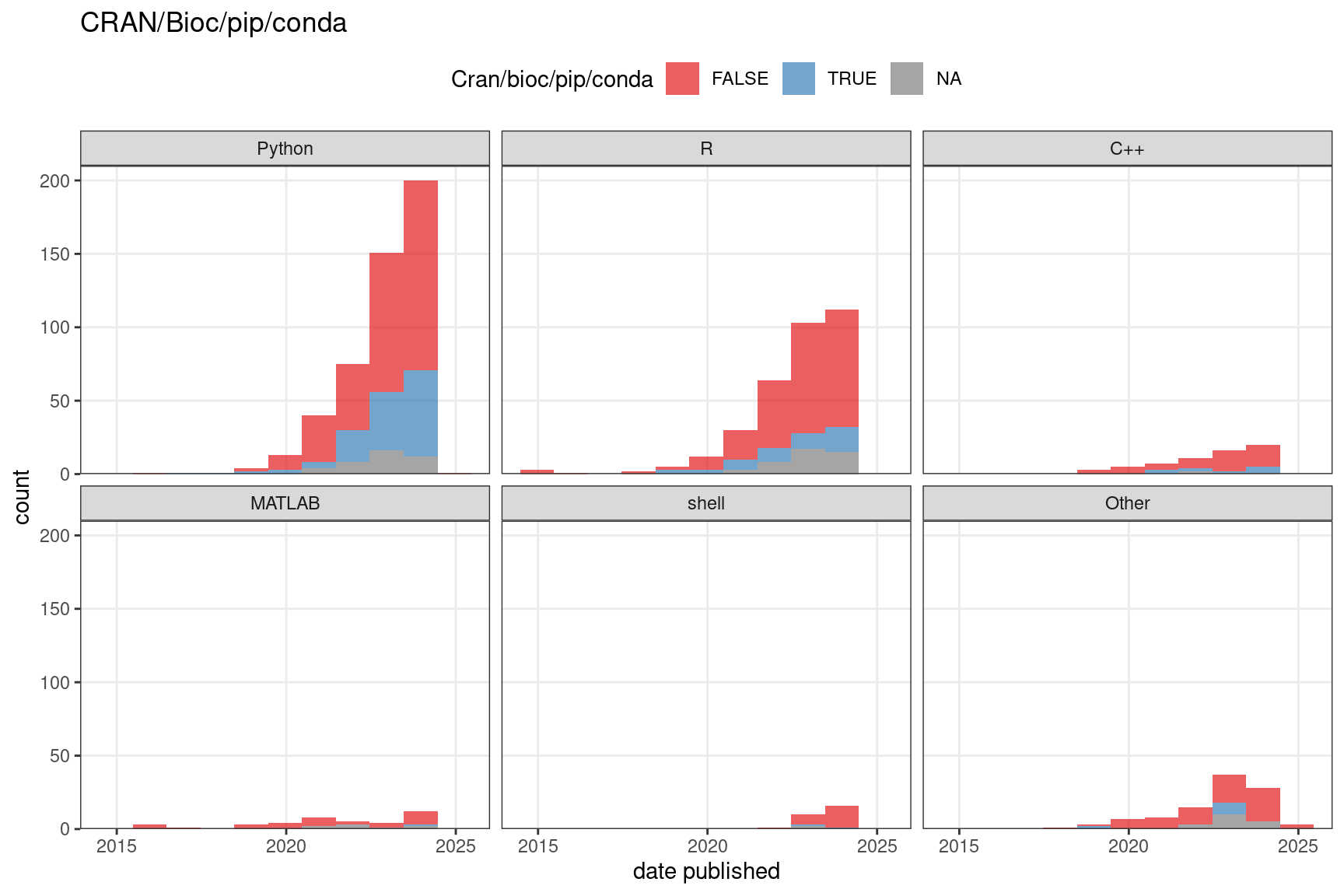

We have also recorded whether the package is well documented and whether it’s hosted on a public repository as a loose proxy of user friendliness and quality. Here “well documented” means at least all arguments of all functions exposed to the user are documented, though we consider it better when examples are included. Public repositories can to some extent indicate user friendliness and quality because the packages need to pass some sort of checking in order to be hosted on the repositories, though some repositories, such as Bioconductor, have stricter standards than others. Moreover, installation of the package is easier when the package is on a public repository. A majority of Python packages and the vast majority of R and C++ packages are well documented, while many older MATLAB packages are not though more recent MATLAB packages are also mostly documented (Figure 7.12). Most packages are not on a public repository such as CRAN, Bioconductor, pip, and conda (Figure 7.13). For CRAN and especially Bioconductor, this might be due to the work required to meet standards of these repositories such as to pass automated checks, write documentation, examples, unit tests (Bioconductor), and vignettes (Bioconductor).

Figure 7.13: The number of packages that are or are not on a public repository such as CRAN, Bioconductor, pip, or conda over time. In both C and D, the bin width is 365 days. NA means the source code repository is not available.

Some of the most popular categories of analyses (Figure 7.4) are reviewed in the rest of this section, arranged roughly in the order each task is performed in a data analysis workflow, from converting raw data to something more amenable to biological interpretations to forming biological hypotheses. The former is specific to certain types of techniques, and includes image processing (smFISH and ISS), spatial reconstruction (scRNA-seq and smFISH and ISS data that are not transcriptome wide), and cell type deconvolution (NGS barcoding data that are not single cell resolution). The latter can largely be applied across types of techniques if given a gene by cell or spot matrix and cell or spot locations. Exceptions to the “largely” include analyses of subcellular transcript location which can only be applied to single molecule resolution data and spatial point process based methods which are more appropriate to model the cell or transcript locations rather than the fixed Visium spot locations. Each category will first be defined, and the common core principles will be summarized.

7.1 Preprocessing

By “preprocessing” we mean extracting information from raw data so common analysis methods can be applied. “Raw data” can mean any form of data, even if processed in some ways, that still needs to have information extracted for common analysis tasks to apply, such as PCA, clustering, and DE. Preprocessing for array based techniques that use NGS is similar to preprocessing for scRNA-seq. The same aligners can be used to align reads to the genome or pseudoalign to the transcriptome, and the spot barcodes can be demultiplexed just like in scRNA-seq; indeed, ST and Visium, the preprocessing pipelines ST Pipeline and Space Ranger wrap the STAR aligner. In addition, the transcript spots need to be aligned to the H&E image for visualization, interpretation, and using information from H&E for analyses. As microdissection based techniques also use NGS, preprocessing would not be very different from that of scRNA-seq or bulk RNA-seq data. However preprocessing of smFISH and ISS data is very different from that of NGS based data, and this would be the focus of this section.

The rawest data the user sees is images. As mentioned earlier, preprocessing of images was typically performed with poorly documented MATLAB code difficult to decipher by users. While some switched to Python recently, such as in MERlin for MERFISH, the preprocessing tool is still often specific to the technique of interest. The HybISS group has switched from MATLAB to Python and used starfish for spot detection and decoding, and pciSeq from this group has been reimplemented in Python (originally MATLAB) as well (Gyllborg et al. 2020; Qian et al. 2020). Some groups used GUI based tools such as Fiji, ImageJ, and CellProfiler (Shah et al. 2018; W.-T. Chen et al. 2020; Sountoulidis et al. 2020). However, as the GUI based analyses are not recorded and shared or are manual, it is difficult to reproduce such analyses.

To provide a free, open source, and well-documented preprocessing tool applicable to data from multiple techniques, the Chan Zuckerberg Initiative developed the Python package starfish implementing image registration, spot calling, barcode calling, cell segmentation, and etc. with classical image processing methods such as thresholding, image registration by translation, top hat filtering, Laplacian of Gaussian, watershed segmentation, and etc. While a good start, it’s not clear how to apply starfish to multiple FOVs based on its tutorials. To improve starfish, another Python pipeline, SMART-Q was developed, with more modularity and improvements upon starfish such as additional parameter to mitigate over-segmentation (individual cell or nucleus broken into too many pieces) by watershed and integration with immunofluorescence images of marker genes (X. Yang et al. 2020). However, SMART-Q was only demonstrated in RNAscope data without combinatorial barcoding, with one FOV at a time. Another such smFISH pipeline based on classical image processing is dotdotdot, which is written in MATLAB but the functions are well documented (Maynard et al. 2020). Again, dotdotdot was only demonstrated on RNAscope without combinatorial barcoding. There are other open source tools for one or more of the preprocessing steps, but are not meant to be a comprehensive pipeline. Below we review each step in preprocessing of smFISH and ISS raw data, how this was done in the original papers of datasets with classical image processing, and alternative and improved approaches such as ones based on deep learning or Bayesian statistics.

The packages mentioned in this section are summarized in the Table 7.1. The package names link to the code repo if available, and the titles link to the paper associated with the package. Each section in this chapter has a table like this. There are relevant packages not mentioned in this book; they can be found in the database.

7.1.1 Image registration

First, images of each FOV from different rounds of hybridization must be aligned; this is image registration. The images can be aligned to a reference of fiducial beads or DAPI staining, which is especially useful when “no fluorescence” is part of the barcode (K. H. Chen et al. 2015; Chee Huat Linus Eng et al. 2019). If “no fluorescence” is not involved, then the reference can be a particular round of hybridization (Shah et al. 2016; X. Wang et al. 2018). Image registration is usually affine, i.e. images are translated, scaled, or rotated to match the reference, and often only translation is used. However, non-linear registration has been used in case the sample does not lie flat and chromatic aberration shifts spots in different channels (Qian et al. 2020).

7.1.2 Spot and barcode calling

Then the spots representing individual transcripts are identified (spot calling). The background of autofluorescence and non-specific hybridization is often removed by thresholding or top hat filtering, only preserving brighter pixels. Spots can be identified with multi-Gaussian fitting with fixed width, which can distinguish between partially overlapping spots (K. H. Chen et al. 2015), or tightened by Lucy-Richardson deconvolution (Jeffrey R. Moffitt et al. 2018), or by identifying local maxima in intensity after identifying potential spots with Laplacian of Gaussian (Shah et al. 2016; X. Wang et al. 2018). The spots can also be identified with deep learning. In Python package graph-ISS (Partel et al. 2019), a convolutional neural network (CNN) is pretrained on manually annotated candidate signal spots from another dataset, and probability that a new candidate obtained after top hat filtering and h-maxima transform is a signal is returned by the last softmax layer of the CNN. Another CNN based spot calling tool is deepBlink (Eichenberger et al. 2020), which builds on the popular U-net architecture.

Once spots are called in each round of hybridization, spots that most likely to correspond to the same transcript are read as barcode and decoded to identify the gene encoded by the barcode (barcode calling). As image registration is imperfect, the spot coming from the same transcript may still be slightly shifted between rounds of hybridization. To identify the barcode from the rounds of hybridization, the spot in one round of hybridization is typically identified with a spot in another round if the spatial distance between the two is sufficiently small, such as less than between 1 and 3 pixels, or smaller than the distance to a barcode that contains error (Shah et al. 2016; X. Wang et al. 2018; Jeffrey R. Moffitt et al. 2016; Chee Huat Linus Eng et al. 2019).

In graph-ISS (Partel et al. 2019), spots identified from CNN from different rounds of hybridization are connected in a graph, with each spot in each round of hybridization a node and the edge weight decreases with increasing distance between spots across rounds up to a maximum distance. Edges connecting spots not from consecutive rounds are removed. The barcode is called by maximum flow of minimum costs between the sink and the source of the graph. Then a quality score is calculated for the barcode according to the CNN probability of spots and distance between spots from different rounds. Although graph-ISS was originally designed for ISS data, it might be adapted to seqFISH, HybISS, STARmap, and SCRINSHOT as well. However, for MERFISH and seqFISH+, in which a transcript may not have signal in some rounds of hybridization, graph-ISS would need to be altered. Alteration would also be required to decode STARmap’s 2 base encoding.

For MERFISH specifically, transcript counts have been statistically modeled in the Rust package MERFISHtools, which takes errors in barcode calling into account (Köster, Brown, and Liu 2019). While MERFISH’s inbuilt error correction (HD4) accounts for 1 to 0 error, which is more common, 0 to 1 errors can still occur, and there are still barcodes with so many errors that they can’t be matched to genes (dropout). The errors are modeled as a multinomial distribution with event probabilities as probabilities of identifying transcripts of a gene correctly with and without the inbuilt correction, misidentifying transcripts of a gene as those from each other gene with and without the inbuilt correction, and dropouts, with actual transcript counts, number of correct and incorrect identifications, and dropouts as latent variables to be estimated by Bayesian inference. The flat prior is used for now.

Computational methods to overcome optical crowding and to deconvolute spots were summarized in Section 5.2.3: corrFISH, BarDensr, and ISTDECO. The above mentioned spot calling methods all treat spot detection and decoding as separate tasks. In contrast, in both BarDensr and ISTDECO, the two related tasks are performed jointly.

7.1.3 Cell segmentation

To assign transcript spots to cells, the cells need to be segmented and spots within the segmented boundary of a cell must be assigned to that cell. For neurons, Nissl staining, which stains the cell body and dendrites but not axons, has been used for cell segmentation (Shah et al. 2016; X. Wang et al. 2018). Without Nissl staining, total poly-A staining can be used instead, and segmented with watershed transform, although poly-a staining concentrates in the cell body and misses cellular processes such as dendrites (Jeffrey R. Moffitt et al. 2018). This misses some interesting biological information; dendrites can have different transcriptomes from the cell body of the same neuron, both in vitro and in vivo (Middleton, Eberwine, and Kim 2019; Mattioli et al. 2019; Farris et al. 2019). Cell segmentation can be done manually as automated methods may not be sufficiently reliable and would still require manual inspection and correction, or automated with machine learning models trained by manual segmentation of smaller number of cells such as the random forest model in Ilastik (X. Wang et al. 2018; Lohoff et al. 2021) and CNN models such as DeepCell (Valen et al. 2016) and CellPose (Stringer et al. 2020). Watershed segmentation is more commonly used.

Without seeing the actual extent of the cell, the quality of manual segmentation is questionable, especially in regions with high cell density, thus limiting the performance of machine learning models. Sometimes problematic methods were used to segment cells, such as 3D Voroni tessellation (Shah et al. 2016) and convex hull of Nissl staining based segmentation (X. Wang et al. 2018); these are problematic because cells need not to take a convex shape so such segmentation may mis-assign transcripts from other cells, or to be conservative about mis-assigning transcripts from other cells, miss transcripts that in fact belong to the cell of interest. However, one study did specifically stain for membrane bound proteins for the actual extent of the plasma membrane and accurate cell segmentation (Lohoff et al. 2021).

To address the challenges of cell segmentation, segmentation methods utilizing scRNA-seq data with annotated cell types have been developed recently. One such method is Python package JSTA (Littman et al. 2020), in which a deep neural network (DNN) learns a segmentation and cell type annotation using the information from a scRNA-seq reference with cell type annotations. First, watershed is used for an initial cell segmentation, both MERFISH and scRNA-seq data are scaled and centered. Then a DNN is trained on the scRNA-seq data to predict cell type from gene expression. Then a separate DNN is trained to refine the cell boundaries iteratively with expectation maximization (EM): The cell type classifier is applied on the watershed segmented MERFISH data to classify putative cells (E). Then a random subset of the pixels are used to train the pixel classifier, maximizing a loss function comparing the new pixel cell type probabilities to the initial/previous assignment (M). The new cell type probabilities are then scaled per pixel according to distance to nuclei. Only probabilities of cell types of neighboring cells are kept and the other cell types are assigned probability 0. The new cell type probabilities of each pixel is then used as event probabilities of a multinomial distribution and randomly assign a new cell type label to the pixel. Then the new cell type assignment to pixels is used to train the pixel classifier again, until the cell type assignments converge. This may refine boundaries between neighboring cells of different types, and the initial watershed boundaries are kept for neighboring cells of the same type. A problem with this package is that inhomogeneous transcript localization is not taken into account.

7.1.4 Alternatives to cell segmentation

Due to the challenges in accurate cell segmentation, some analysis methods did away with cell segmentation altogether, directly using the transcript locations. In the Julia package Baysor (Petukhov et al. 2020), based on Markov random field (MRF), which encourages nearby transcripts to take the same label. A spatial neighborhood graph is constructed with Delaunay triangulation with each transcript as a node. The probability of each transcript taking each label is modeled with a MRF and initial edge weights decrease with distance. This package first distinguishes between intracellular transcripts and extracellular background. Then it can also assign transcripts to cell types without cell segmentation, with a scRNA-seq reference with cell type annotations; as locations of the transcripts are known, this amounts to annotating tissue regions with cell types. It can also segment cells, with existing segmentation and staining (e.g. Nissl, DAPI, and poly-A) as priors. Cell segmentation can also be informed by cell type labels, so transcripts from different cell types are not assigned to the same cells. Each of the three functionalities, identifying intracellular transcripts, cell type annotation of transcripts, and cell segmentation, is based on a different MRF model. The parameters of the model, such as edge weights, labels of other transcripts, and etc. are estimated with EM. The drawbacks of this package are that its current implementation is limited to 2D and it does not take inhomogeneous subcellular transcript localization into account.

Besides cell type annotation of transcripts based on MRF, another segmentation-free method is also described in the Baysor paper (Petukhov et al. 2020), in which the \(k\) nearest neighbors of each transcript are taken to be a pseudo-cell and analyzed by standard scRNA-seq data analysis methods such as clustering, PCA, and UMAP. For ISS, transcripts can be probabilistically assigned to cells and cells to cell types, with pciSeq (Qian et al. 2020). Briefly, spatial locations of transcripts are modeled by a Poisson point process whose intensity is scaled by a term following Gamma distribution to give the negative binomial distribution of transcript counts in cells. The intensity for each gene and each cell is also informed by distance between transcripts and nucleus centroids (from DAPI), scRNA-seq data of the cell type this cell belongs to, and the detection efficiency of ISS. The data consists of locations of transcripts and the genes they come from. The unknown parameters, such as probability of each transcript to come from each cell and each cell from each cell type, are estimated by variational Bayesian inference. Cell types and spatial domains can also be identified without scRNA-seq cell type annotations as well.

In the Python package SSAM (Jeongbin Park et al. 2019), transcript density is first estimated with Gaussian kernel density, which is then projected into a square lattice. Local maxima of transcript density are taken as pseudo-cells and clustered to infer de novo cell types. Then tissue domains are identified by clustering sliding windows of spatial cell type maps. Tissue domains can also be identified without appealing to cell types.

In the Python package spage2vec (Partel and Wählby 2020), graphs are constructed by connecting each transcript spot to its neighbors within a certain distance such that at 97% of all transcript spot are connected to at least one neighbor. Then the transcript spots with these graphs are projected by a graph neural network (GNN) into a 50 dimensional space which is informed by the graphs and thus local neighborhoods of transcripts. The transcript spots in the 50 dimensional space can then be clustered or projected to 2 or 3 dimensions with UMAP to show tissue domains.

7.2 Exploratory data analysis

After data preprocessing, as described above, for array or microdissection based data, we get a gene count matrix with locations of voxels, and for smFISH and ISS based data, we get locations of transcripts, and if cell segmentation is performed, a gene count matrix and cell boundaries as well. For scRNA-seq, Seurat (Stuart et al. 2019), scanpy, and packages surrounding SingleCellExperiment on Bioconductor such as scran and scater implement further preprocessing of the gene count matrix, such as data normalization and scaling, as well as basic EDA methods to inspect and create an overview of the data, such as quality control (QC), data visualization, finding highly variable genes, dimension reduction, and clustering, and have user friendly tutorials, consistent user interface, and decent documentation. Such integrative EDA packages, as well as more specialized data visualization packages, have emerged for spatial transcriptomics as well, and are reviewed in this section.

In practice, spatial transcriptomics data is often analyzed with standard scRNA-seq analysis at the EDA stage, with one or more of PCA, tSNE, UMAP, clustering cells or spots, and finding marker genes for clusters, and differential expression (DE) between case and control (Shah et al. 2016; Jeffrey R. Moffitt et al. 2018; M. Zhang et al. 2020; Moncada et al. 2020; Berglund et al. 2018). For ST and Visium, the data is also often normalized like in scRNA-seq with CPM or classical Seurat log normalization and scaling (Moncada et al. 2020; A. L. Ji et al. 2020; Berglund et al. 2018). Seurat also implements data integration, which has been used to transfer cell type labels from scRNA-seq to Visium for cell type deconvolution (Mantri et al. 2020), and can potentially be used to impute gene expression in non-transcriptome wide spatial data from scRNA-seq (discussed in Section 7.3). Then the clusters, marker genes, and genes of interest from scRNA-seq are often visualized within spatial context, and some studies proceed to other analyses that utilize the spatial information. Due to the relevance of scRNA-seq data normalization, EDA, and data integration to spatial data, the existing scRNA-seq ecosystems of Seurat, scanpy (spatial part in Squidpy (Palla et al. 2022)), and SingleCellExperiment (spatial part in SpatialExperiment (Righelli et al. 2022)) are adapting to the rise of spatial transcriptomics, with new data structures, visualization of gene expression and cell metadata (e.g. total UMI counts, cluster, and cell type) on the spatial coordinates, with H&E as background for ST and Visium, and perhaps other spatial functionalities such as spatial neighborhood graphs and spatially variable genes.

There are other EDA packages not originating from an existing scRNA-seq EDA ecosystem as well. R packages Giotto (Dries et al. 2021), STUtility (L. Bergenstråhle et al. 2020), and SPATA (Kueckelhaus et al. 2020) not only support basic QC and EDA functionalities like those in Seurat, but also spatial analyses not supported by Seurat. These packages are well documented, but are not (yet?) on CRAN or Bioconductor.

Giotto has two main parts: Giotto Analyzer and Giotto Viewer. Besides basic Seurat functionalities and spatial data visualization, Giotto Analyzer implements several types of spatial analyses to be reviewed in more detail in the rest of this section: cell type enrichment in spatial data without single cell resolution, identifying spatially variable genes, gene co-expression patterns, cellular neighborhoods, interactions between cell types and ligand-receptor pairs in such interactions, and genes whose expression is associated with cell type interactions. However, the methods implemented in Giotto tend to have simpler principles than those of more specialized packages for each of the above tasks, such as hypergeometric test for cell type enrichment and spatially coherent genes, though Giotto wraps specialized packages such as SpatialDE (Svensson, Teichmann, and Stegle 2018), trendsceek (Edsgärd, Johnsson, and Sandberg 2018) for spatially variable genes, and smfishhmrf (Q. Zhu et al. 2018) to identify spatial cellular neighborhoods. Giotto Viewer provides interactive visualization of the data. As Giotto uses its own object class to store data, interoperability with other single cell and spatial software becomes more challenging given the popularity of Seurat and SingleCellExperiment.

In contrast, STUtility develops upon the Seurat class, so is interoperable with other Seurat functionalities. STUtility is specific to ST and Visium, while Giotto applies to all spatial technologies with cell or spot level data. Beyond Seurat, STUtility enables masking the array to remove spots outside the tissue, alignment of multiple sections, manual annotation and alignment with shiny, visualization of the aligned sections in 3D, finding neighbors of spots of a given type, and using NMF to identify archetypal gene expression patterns. While Giotto and STUtility might not have the most sophisticated spatial analysis methods, their main advantage is akin to that of Seurat and SingleCellExperiment, namely that multiple analysis tasks, often with a variety of algorithms for each task, can be done with the same object class and user interface, saving the time and trouble on learning new syntax and converting objects to new classes.

SPAtial Transcriptomic Analysis (SPATA), while implementing its own class, uses Seurat for data normalization and dimension reduction. SPATA also implements functions to visualize spatial data and a shiny app for not only interactive data visualization but also manually setting spatial trajectories and annotation of spatial regions. It also wraps Monocle 3 (J. Cao et al. 2019) for pseudotime analysis and SPARK (S. Sun, Zhu, and Zhou 2020) for finding spatially variable genes. In addition, SPATA implements its own method of finding spatially variable genes, reviewed in Section 7.5.

Some R packages have also been written for specific visualization tasks, but not the entire EDA process. Spaniel is a package that builds on Seurat and SingleCellExperiment for interoperability and implements QC plots that help the user to remove ST or Visium spots outside the tissue. However, Spaniel’s main difference from STUtility is that Spaniel can create a shiny app for interactive visualization and exploration of the data. While this may make Spaniel sound unremarkable, it was written about a year before Seurat supported spatial data. Another specialized package is SpatialCPie (J. Bergenstråhle, Bergenstråhle, and Lundeberg 2020), which also uses shiny for interactive visualization. SpatialCPie cluster ST or Visium data at multiple resolutions and plots a graph showing how clusters from one resolution relates to those from other resolutions. It also plots a pie chart at each ST or Visium spot, on top of an H&E background, showing similarity of each spot to each cluster, to give a more nuanced view than simply coloring the spots by cluster. Both packages are on Bioconductor.

7.3 Spatial reconstruction of scRNA-seq data

It may be fair to say that the holy grail of spatial transcriptomics is to profile the whole transcriptome at single cell resolution and without dropouts. We have already seen that, with seqFISH+ and ExM-MERFISH, this goal seems to possibly be within reach. However, the goal may be further than is seemingly the case, as the smFISH based techniques are still not generally applied to more than a few dozens to a few hundreds of genes, in the order of 10,000 cells (Figure 5.23, Figure 5.24), which only covers a small area of tissue. Meanwhile, techniques without single cell resolution and with lower detection efficiency but can cover large swaths of tissue have grown in popularity (Figure 5.37). Hence spatial transcriptomics has not supplanted scRNA-seq – which has also grown tremendously in popularity in recent years (Svensson, Veiga Beltrame, and Pachter 2020) – but remains a complement. Spatial data that is not transcriptome wide can be complemented by scRNA-seq for information of other genes; this section reviews computational methods that map cells from scRNA-seq to spatial locations with a small panel of landmark genes and/or to impute gene expression not profiled by the spatial reference in space, or in short spatial reconstruction of scRNA-seq data. These are the most common types of data analysis(Figure 7.4). The two tasks are related but distinct, as when cells from scRNA-seq are mapped to spatial locations, spatial patterns of the genes expressed in the cells are also predicted. However, gene expression can also be predicted at spatial locations without mapping cells to the locations. Spatial data that does not have single cell resolution can be complemented by scRNA-seq for cell type deconvolution of the spots (Section 7.4). In turn, spatial data complements scRNA-seq with spatial information such as gene expression patterns and cell neighborhoods.

Attempts at spatial reconstruction of single cell data date back to 2014, when growth in the popularity of scRNA-seq started to pick up pace (Svensson, Veiga Beltrame, and Pachter 2020). Early (2014-2017) methods tend to fall in three categories: direct dimension reduction with PCA, ad hoc scoring, and pseudotime projected into space. The first two have been by and large abandoned due to their limitations, and the third isn’t commonly used. Another category is generative modeling, which we consider intermediate due to its early origin and lasting legacy as some later methods involve more sophisticated generative modeling. Later (2018-present) methods commonly involve a lower dimensional latent space shared by the scRNA-seq and the spatial data, and many different approaches have been tried to obtain the shared latent space and project it back into the higher dimensional space of gene expression. However, other principles were used as well, such as optimal transport, nonlinear direct dimension reduction, black box machine learning, mixture of experts model, and etc.

7.3.1 Direct dimension reduction

As already mentioned in our summary of Puzzle Imaging, spatial reconstruction of dissociated tissue can be considered a dimension reduction problem. Here with scRNA-seq, the high dimensional gene expression data is directly projected to 1 to 3 dimensions that correspond to the spatial dimensions.

One of the earliest reconstruction methods (2014) maps single cell qPCR data onto a sphere that mimics the developing mouse otocyst (R. Durruthy-Durruthy et al. 2014). Ninety six genes were profiled with qPCR in single cells, and the gene expression profiles were projected to the first 3 principal components (PCs), which are then projected onto the surface of a sphere. The sphere is oriented on the dorsal-ventral (DV), anterior-posterior (AP), and left-right (LR) axes by expression of marker genes known to be expressed in one end of those axes. At least for the otocyst, this approach seemed to recapitulate expression patterns of many genes, at least qualitatively, at the resolution of octants. This approach was later adapted to reconstruct the human (J. Durruthy-Durruthy et al. 2016) and mouse (Mori et al. 2017) blastocysts. A one dimensional version of this approach was also adapted to spatially reconstruct cells from the organ of Corti along the apical and basal axis, though the PCA was performed only on DE genes between apical and basal cells and 2 PCs were projected to 1 dimension (Waldhaus, Durruthy-Durruthy, and Heller 2015).

Direct dimension reduction is still used after 2018, with dimension reductions other than PCA. Another form of dimension reduction for spatial reconstruction is the self-organinzing map (SOM) as in the package SPRESSO (Mori et al. 2019). The Geo-seq mid-gastrula mouse embryo data (G. Peng et al. 2016) was reconstructed in 3D with genes selected from GO terms; 18 genes selected from a few GO terms could place all microdissected samples into the correct AP/LR quadrant with SOM. However, such genes were found by checking the SOM projections from thousands of GO combinations against the Geo-seq ground truth and may not apply to other biological systems. Also, the spatial reconstruction along the DV axis was not checked, though in Geo-seq, the samples were microdissected along the DV axis with a cryotome in addition to dissection into AP/LR quadrants with LCM.

A more recent, graph based dimension reduction is GLISS (Junjie Zhu and Sabatti 2020). After using a Laplacian score based method to identify landmark genes from spatial data (to be reviewed in Section 7.5), a graph is constructed for the scRNA-seq data based on similarity in expression profiles of the landmark genes among cells as a proxy to spatial locations. With this graph, a new set of genes whose expression depend on the structure of the graph, or spatially variable genes, are identified, and added to the landmark genes. A new similarity graph is then constructed with both the landmark genes and spatially variable genes, and the dimension reduction is the eigenvectors of the graph Laplacian of this graph, starting from the second eigenvector. One dimensional projection would be the second eigenvector. Two dimensional projection would be the second and third, and so on.

Ligand-receptor (L-R) pairs have also been used for direct dimension reduction, in CSOmap (Ren et al. 2020). Expression of L-R pairs in scRNA-seq cells is used to construct a cell-cell affinity matrix, with higher affinity meaning that two cells are more likely to be close to each other. Then an algorithm similar to tSNE is used to project the affinity matrix into 3 dimensions, corresponding to the physial dimensions. The Kullback–Leibler (KL) divergence between the affinity and probability of the two cells to be neighbors is minimized, with constraints of the minimum physical size of the cell and the amount of space available.

7.3.2 Ad hoc scoring

The methods above tend to only capture simple spatial patterns with simple gradients along axes, or have low resolution that is effectively restricted to octants or quadrants. More complex patterns with higher resolution can be reconstructed qualitatively with some score that measures similarity between each cell in scRNA-seq and each location in a spatial reference for the genes present in both datasets and favors genes more specific to a subset of cells. The spatial pattern of the score is the predicted gene expression pattern. As the score is qualitative and does not utilize statistical modeling of the data, this is called ad hoc scoring. The spatial reference is FISH (not smFISH) data of a panel of genes, with images for different genes registered onto a common coordinate system. As FISH is not very quantitative, both the spatial and the scRNA-seq data are binarized into “on” and “off” for each gene, and the predicted gene expression patterns based on the score is binarized as well since the score is only qualitative. Such approach is simple to implement, but the binarization misses quantitative nuances of gene expression patterns.

Ad hoc scoring has been used in Platynereis dumerilii brains; the FISH atlas was broken into voxels 3 \(\mu\)m on each side, smaller than the average single cell, and 98 landmark genes in the atlas used to predict patterns of other genes in scRNA-seq with a score (Achim et al. 2015). A different method, DistMap, uses a score based on Matthew correlation coefficient (MCC) was used to soft assign cells from scRNA-seq to locations in the BDTNP atlas with 84 landmark genes and to predict expression patterns of the other genes (Karaiskos et al. 2017). The latter method inspired the DREAM Single-cell transcriptomics challenge in 2018 (Tanevski et al. 2020), a competition in which participants select the most informative genes and predict cell locations with 60, 40, and 20 of the 84 BDTNP landmark genes. At least some participating teams adapted the scoring method used in the original DistMap after selecting genes with their own methods (Alonso, Carrea, and Diambra 2020; Pham et al. 2020).

7.3.3 Generative models

Many areas in spatial transcriptomics data analysis describe the data with a plausible statistical model and fit such a model to the data. Generative models have several advantages. First, uncertainties in parameter estimates and model predictions can be computed. Second, the model is more explainable, i.e. that humans may understand contributions of variables to the fitted model. Explainability plays an important role in models identifying spatially variable genes. As already mentioned, some of the segmentation-free smFISH or ISS analysis packages, such as pciSeq, rely on generative models. Generative models are used for spatial reconstruction of scRNA-seq data as well.

The popular scRNA-seq EDA package Seurat originated from spatial reconstruction of scRNA-seq data in 2015, to map cells from scRNA-seq to a WMISH reference with 47 landmark genes (Satija et al. 2015). The WMISH images were mostly obtained from ZFIN, and divided into 128 bins, which was then collapsed into 64 due to LR symmetry. As WMISH is not very quantitative, the WMISH reference was binarized. Due to the sparsity of scRNA-seq data, the normalized scRNA-seq data was smoothed. Then a mixture of 2 Gaussian distributions was fitted to each gene, for the “on” and the “off” states. With such distributions, the posterior probability that each cell comes from each bin can be calculated with the probability that the cell is “on” of “off” like in the bin for the 47 genes, although cells can very well have intermediate and more nuanced gene expression. The spatial centroid of each cell is the center of mass of the spatial map of the posterior probabilities. So far, the landmark genes have been assumed to be independent, which is unrealistic. Centroids that are close to actual bins are then used to calculate a covariance matrix of a subset of the landmark genes for each bin, with which the Gaussian mixture models and posterior probabilities are updated. While this model seems reasonable, it is no longer used, likely because of the advances in highly multiplexed smFISH and ISS that produced quantitative spatial references that do no need binarization for some tissues, especially the mouse brain. Nevertheless, the scRNA-seq part of Seurat lived on. As already mentioned, WMISH or ISH atlases are the only spatial transcriptomics resources available for some biological systems and most of the atlases are not transcriptome wide, so this method can still be useful.

A different generative model was used to map scRNA-seq cells to a smFISH atlas in the mouse liver (Halpern et al. 2017). Six marker genes known to be patterned in the portal-central axis of the hepatic lobule were profiled with smFISH. Then the smFISH data was binned into 9 zone, normalized, and each gene in each zone was modeled with a gamma distribution, which was then multiplied by coefficients correcting for the fact that only part of the cell is in the tissue section for the \(\lambda\) of a Poisson distribution to form a negative binomial distribution. The negative binomial distribution was sampled and normalized for the whole cells in scRNA-seq and proportion of UMIs from the gene of interest, which would approximate the distribution of a cell in each zone having expression levels of the gene of interest. The prior probability of a hepatocyte originating from each zone seems to be the relative area of the concentric ring that is each zone, centered on the central vein. With the prior and the sampled distribution of expression of marker genes, the posterior probability of each cell from each zone can be calculated with Bayes rule. To impute expression of genes other than the 6 markers in each zone, the gene count matrix is multiplied to the posterior probability matrix (after weighing the probabilities). Here the 6 markers are assumed to be independent, which might not be realistic. The same approach is still used by the same lab for more recent liver datasets (Halpern et al. 2018; Droin et al. 2020), although we are unaware of its use outside that lab.

Some of the shared latent space methods are based on generative models as well, with the latent space as part of the model. In gimVI (Lopez et al. 2019), which is adapted from scVI specifically to impute gene expression in space by integrating spatial and scRNA-seq data, gene expression in scRNA-seq is modeled with the negative binomial (NB) or zero inflated negative binomial (ZINB) distribution, and the spatial data is modeled with the Poisson or NB distribution (depending on the technology and dataset). The scRNA-seq and spatial data are modeled as coming from a shared latent lower dimensional space, which is decoded back to the higher dimensional gene expression space by a neural network to capture nonlinear structures as part of the mean parameters of the NB, ZINB, or Poisson distributions. The latent space is estimated when the model is fitted with variational Bayesian inference. To impute gene expression in space, the latent space is sampled and passed through the decoding neural network to get the mean parameters of the gene expression distributions for spatial data.

Another generative model with a shared latent space is semi-supervised t-distributed Gaussian process latent variable model (sstGPLVM) (Verma and Engelhardt 2020). The scRNA-seq or spatial data is modeled as coming from a noisy sample in high dimension from a lower dimensional shared latent space. The latent space can be concatenated to fixed covariates such as batch, technology used to collect data, spatial coordinates, and etc. and is estimated with black box variational inference. Missing data in gene expression and covariates can be estimated from the latent space, thus enabling mapping scRNA-seq cells to spatial coordinates and imputing gene expression, and the latent space can be collapsed across a covariate to remove its effect. The latent space has a Gaussian prior with identity variance. The prior of the high dimensional noiseless space is a Gaussian process with covariance between cells defined by a kernel that is a weighted sum of Matern 1/2 and Gaussian kernels to allow for a non-smooth manifold that better represents data. The input to the kernel is a weighted sum (length scales of kernel) of l1 distance between the cells in the latent space (including the covariates). The noise added to the noiseless high dimensional space to model actual data is a heavy tailed Student’s t distribution, to account for overdispersion and non-Gaussian distribution of the data. This method is not specifically designed for spatial data, but can be used to integrate different scRNA-seq datasets as well.

7.3.4 Shared latent space

There are some additional methods that project scRNA-seq and spatial data into a shared latent space to impute gene expression in space but without generative modeling. Some of them are designed for data integration in general, but included here the authors demonstrated integration of scRNA-seq and spatial data, seeming to intend their packages for such usage.

In version 3 or later of Seurat (Stuart et al. 2019), the scRNA-seq and spatial datasets are projected into a shared latent space by canonical correlation analysis (CCA), which finds a low dimensional space that maximizes correlation between the two dataset, or by projecting one dataset into a low dimensional PCA space of the other dataset. Then anchor cells are identified, as cells in the two datasets with sufficient shared neighborhood, and the weight of each anchor on each cell in the spatial dataset is calculated by ad hoc scoring favoring closeness in the latent space and more similar shared neighborhood to the anchor. Gene expression is then simply transferred from scRNA-seq to spatial data by multiplying the normalized gene count matrix of genes absent from the spatial data in scRNA-seq with the anchor weight matrix.

LIGER (Welch et al. 2019) is a different data integration method, of which a Seurat wrapper has been implemented. The latent space is inferred by integrative NMF, which finds a set of factors unique to the scRNA-seq or the spatial dataset, and a set of factors shared by both. Gene expression is imputed in spatial data by averaging the expression of genes of interest in the \(k\) (50) nearest neighbors (kNN) from the scRNA-seq data in the space spanned by the shared factors.

In SpaGE (Abdelaal et al. 2020), a common latent space is inferred as such: gene shared by the spatial dataset and scRNA-seq are used to do PCA independently for the two datasets. Then the cosine similarity matrix of the PCs of the two dataset is passed to singular value decomposition (SVD). Then the left and and right singular vectors are used to align the PCs to a common latent space of principal vectors. The original data is projected into the space spanned by the principal vectors of the scRNA-seq data. Then kNN is used to project gene expression from scRNA-seq to spatial data.

In Harmony (Korsunsky et al. 2019), the data, with different batches, is first PCA projected. Then the PCA projection is clustered with an altered k-means clustering algorithm that assigns cells probabilistically to clusters and maximizes diversity in batches in each cluster. Then the batch correction is found by mixture of expert model. In each cluster, the PCA projection is modeled by a linear combination of variables in the design matrix (containing batch information), with an intercept term for batch free variation in each cluster. The batch correction term is a weighted sum of the linear model predictions excluding the intercept term, weighted by the probabilistic assignment of each cell to each cluster. Then the batch correction term is subtracted from the original PCA projection. The clustering and correction are repeated until convergence. This way, the cells from scRNA-seq and spatial data are aligned in a common latent space. Then gene expression is imputed in spatial data with kNN.

7.3.5 Other principles

Approaches that do not fall into the categories reviewed above are reviewed in this subsection, including projecting pseudotime into space, black box machine learning, and optimal transport.

In some biological systems, cell differentiation corresponds to physical locations of the cells, so pseudotime, which supposedly arranges cells along differentiation trajectories, have been mapped to space, thus placing dissociated cells in space. For instance, in the bone growth place, cells at different stages of differentiation are physically arranged along the length of the bone in a cylinder, so the pseudotime trajectory of the cells was simply warped into a straight line for spatial reconstruction (J. Li et al. 2016). Similarly, in Drosophila larva, cell differentiation corresponds to the proximal-distal axis in the antenna disk and the AP axis in the eye disk, so cells from both scRNA-seq and scATAC-seq were binned according to pseudotime and assigned to the corresponding bins in the eye-antenna disk (González-Blas et al. 2020). However, this would not work in tissues without such neat correspondence, such as the Drosophila embryo, in which some genes are expressed in periodic patterns to specify segments.

Deep learning libraries such as PyTorch also made it more effective to predict locations for scRNA-seq cells without a pre-conceived statistical model of the data. For instance, after data normalization and batch correction, a deep neural network can be trained on ST data with annotations of spatial regions to predict spatial regions for scRNA-seq data (Ortiz et al. 2020). In addition, PyTorch’s gradient-based optimization has been used to probabilistically map scRNA-seq cells to spatial locations in Tangram (Biancalani et al. 2020). The spatial reference is voxelated, and a mapping matrix of probability of each cell mapping to each voxel is inferred by minimizing KL divergence between mapped and actual cell density in each voxel and favoring stronger correlation between mapped data and the spatial reference in expression of each gene across voxels and gene expression profiles of each voxel.

Thus far, the reconstruction methods do not take spatial autocorrelation – i.e. that cells physically closer to each other are more likely to have more similar gene expression profiles – in the spatial data into account. Optimal transport, i.e. finding a way to transport a pile of dirt from one place to others with minimum cost, has been used to exploit spatial autocorrelation to map scRNA-seq cells to spatial locations. In novosparc (Nitzan et al. 2019), neighborhood graphs are constructed for scRNA-seq in gene expression space and for spatial reference data in physical space. Then assuming spatial autocorrelation, optimal transport is used to place cells in locations to make the two graphs match. This can be done without gene expression data in the spatial grid, but can be improved with a spatial gene expression reference. In SpaOTsc (Cang and Nie 2020), first an optimal transport plan from scRNA-seq cells to spatial locations is inferred with gene expression dissimilarity matrices between scRNA-seq cells and between cells and locations and a spatial distance matrix between spatial locations. Then a spatial distance matrix for scRNA-seq cells is imputed based on that optimal transport plan. The plan can also be used to impute gene expression in space. SpaOTsc also uses optimal transport to infer cell-cell interaction, to be reviewed in the Cell-cell Interaction section. A drawback of this kind of method is that because different cell types can mix in the same spatial neighborhood, such as hepatocytes and Kupffer cells in the liver, spatial autocorrelation is not absolute.

Spatial autocorrelation can also be utilized without optimal transport, but with tensor completion in Canonical Polyadic Decomposition (CPD) form as in FIST (Z. Li et al. 2020). The spatial data can be viewed as a 3 dimensional tensor, with the x and y coordinates and gene expression at each location ((or 4 with z coordinate). CPD is used to improve computational efficiency. In CPD, the tensor is approximated with a sum of rank 1 tensors, i.e. cross products of 3 vectors, one for each dimension. This decomposition, with extra dimensions for unknown gene expressions, is found by minimizing the difference between the reconstructed tensor with the existing tensor for known genes and by favoring spatial autocorrelation of gene expression on a neighborhood graph and favoring similarity of expression of genes with similar functions in a protein-protein interaction graph.

7.4 Cell type deconvolution

There is another aspect to how spatial and scRNA-seq data complement each other. In array based techniques that do not have single cell resolution, the cell type composition of each spot can be estimated with scRNA-seq data. Perhaps because of the increasing popularity of ST and Visium, several cell type deconvolution methods have been developed in the past year, falling into four categories: negative binomial models, packages built upon linear models but without negative binomial, topic modeling, and packages not explicitly using statistical modeling. While any tool designed for cell type deconvolution of bulk RNA-seq data can be used, this section specifically focuses on cell type deconvolution tools designed with spatial data in mind.

7.4.1 Negative binomial

Cell type deconvolution can be performed by explicitly modeling spot level gene expression in terms of individual cell types, usually the scRNA-seq cell clusters. As gene expression is over-dispersed compared to Poisson and is often well modeled with negative binomial, the negative binomial distribution is often used to model gene expression in cell type deconvolution. In stereoscope (Alma Andersson et al. 2020), a negative binomial distribution is fit to the expression of each gene in each cell type in scRNA-seq data. Then at each spot, gene expression is modeled as a weighted sum of the negative binomial distributions from each cell type, and the weights are estimated by maximum likelihood estimation (MLE).

In cell2location (Kleshchevnikov et al. 2020), expression of each gene at each spot is modeled as negative binomial, parameterized with rate and dispersion. The rate is a weighted sum of cell type gene expression signatures and the weights themselves are modeled with factors to group cell types for similar cell type localization. The rate is also adjusted for technology sensitivity, which can be different between scRNA-seq and the spatial technique, and additive shifts specific to the gene and spot. The model is Bayesian and the weights and the sensitivity scaling parameter have informative priors. The parameters are estimated with variational inference. The weights can be interpreted as the number of cells of each cell type at each spot.

In DestVI (Lopez et al. 2021), expression of a gene in both the scRNA-seq reference and the query spatial dataset is modeled by a negative binomial model, parameterized with rate and dispersion, and the rate is informed by a low dimensional cell type specific latent embedding from a variational autoencoder. For the spatial data, the rate involves a weighted sum of the cell type latent vectors representing average state of the cells. These weights would be cell type proportions after normalizing so they add up to 1. The scRNA-seq and the spatial data are modeled separately. To link the two models, the scRNA-seq cell type latent vectors are used as priors for those for the spatial data, and the decoder of the model trained on scRNA-seq data is used in the model for the spatial data, as transfer learning of cell state decoding.

While not negative binomial regression per se, the negative binomial model is central to AdRoit (T. Yang et al. 2021), so AdRoit is summarized in this subsection. First, genes informative of cell types are selected, from cell type marker genes and highly variable genes. Then for each cell type, a negative binomial distribution is fitted to expression of each gene with MLE. Then the mean and variance of this negative binomial distribution are computed. This fitting is also done to each sample (in bulk RNA-seq) or spot (ST and Visium), and the mean and variance are computed. Then cell type proportions are roughly estimated in each sample with non-negative least square (NNLS), where the mean in the sample is a weighted sum of the means in each cell type, with a constant to make sure that the proportions add up to one. Then the log ratio between the actual sample mean and the predicted mean from the rough proportions (without the scaling constant) for each gene is computed as a gene specific scaling factor. Then a similar linear model of the mean per sample and mean per cell type for each gene is fitted with NNLS, with that gene specific scaling factor multiplied to the cell type proportion coefficients. In the loss function, weights are added to reduce contribution from genes not specific to a cell type or too variable across samples and the cell type proportion coefficients are L2 regularized due to collinearity of similar cell types. Then the cell type proportion coefficients are scaled to add up to 1.

7.4.2 Other (generalized) linear models

Despite the over-dispersion, the Poisson distribution is often used to model gene expression as it captures the discrete nature of transcript counts and is simpler than the negative binomial distribution. In Robust Cell Type Decomposition (RCTD) (Cable et al. 2020), gene expression at each spot is modeled as a Poisson distribution, whose mean is an expected rate scaled by total transcript count at the spot. The log rate is the sum of the log of weighted sum of mean gene expression for each cell type from a scRNA-seq reference, a fixed spot specific effect term, a gene specific platform random effect, and another gene specific random effect term for overdispersion. The parameters, including cell type weights, are then estimated with MLE.

SpatialDecon (Danaher et al. 2020) is written for GeoMX DSP, and is based on log-normal regression. As gene expression is often right skewed, log transformation is commonly used to pull the tail in and make the data look a bit more normal for statistical methods that assume normal distribution of the data. After log transformation, a linear model is fitted so the observed gene expression in each ROI is a weighted sum of gene expression signatures of each cell type, with an additive baseline as intercept. The weights must be non-negative, and their p-values are calculated. To remove outliers, any gene expression value below a threshold where technical noise dominates is set to that threshold.

In both the prequel and current era, NMF is quite popular among data analysis methods as the factors (cell embeddings) and the gene loadings tend to exhibit block like structures and the values of the basis and the loadings are enforced to be non-negative, corresponding to the non-negative nature of gene expression data and making the results more interpretable. The blocks in the factors may reflect cell types or clusters, and the blocks in gene loadings may reflect cell type marker genes. NMF has been used for cell type deconvolution as well. To address slide-seq (version 1)’s lack of single cell resolution and poor efficiency, NMFreg was developed to reconstruct the expression profile of each spot as a weighted sum of cell type signatures from scRNA-seq (Rodriques et al. 2019). First, scRNA-seq gene count matrix of cell types of interest and cell type annotations is decomposed with NMF, and each factor is assigned to a cell type and one cell type can have multiple factors. Then non-negative least squares is used to compute the weights of the weighted sum of the factors for each spot. As such weights tend not to cleanly assign spots to cell types, perhaps due to the sparsity of scRNA-seq and slide-seq data, the weights are then thresholded. The threshold is the maximum cell loading of cells not assigned to the cell type of interest among in the factors of this cell type. The weights for this cell type is only kept if the \(l_2\) norm of the weight vector for these factors exceed the threshold. Another NMF based method, SPOTlight (Elosua et al. 2020), uses a very similar principle.

7.4.3 Topic modeling

In Chapter 6, we performed topic modeling of LCM related abstracts with the stm package, a generative model of word counts in abstracts from latent topics, and discussed proportion of each topic in each abstract, topic proportions in the entire corpus, and probability of getting each word from each topic. The stm method is built upon a popular and classic topic modeling method, latent Dirichlet allocation (LDA); beyond LDA, stm allows for covariates in portion of topics in each abstract (e.g. discussed in Sections 6.3 and 6.4) and correlation between topics (e.g. discussed in Figure 6.5). In both stm and LDA, a set number of topics must be chosen before hand, and the number can be chosen based on metrics such as how well word counts are predicted in a held out portion of the dataset. LDA has been used in some cell type deconvolution methods, where cell type is analogous to topic and gene is analogous to word.

In STRIDE (D. Sun et al. 2021), the scRNA-seq and the spatial data are assumed to be similar enough to be projected into a shared latent space, inferred from LDA. Here topic isn’t entirely the same as cell type. Contribution of each topic to each cell and the probability of getting each gene from each topic are estimated, and from the former contribution of each topic to each pre-annotated cell type can be summarized. Then the model trained on scRNA-seq data is used to predict contribution of each topic to each spot in the spatial data, which can then be related to contribution of each cell type to each spot.

In contrast, STdeconvolve (B. F. Miller, Atta, et al. 2021) does not use a scRNA-seq reference and the topics are the cell types. First, genes more likely to be informative of cell types are selected, including genes that are over-dispersed and are neither expressed in too few spots nor constitutively expressed in all spots. This is reminiscent of removing stop words (e.g. the, is, to, of, so, and) and rare words in text mining, as performed for the analyses in Chapter 6, as these words are less informative of the topics. Then with the informative genes, LDA is performed to estimate contribution of each cell type to each spot and probability of getting each gene from each cell type.

7.4.4 Other principles without explicit statistical modeling

Some of the packages already mentioned in previous sections have cell type deconvolution functionalities as well. For instance, Seurat’s data transfer based on anchors between datasets can also be used to transfer cell type annotations, and the ad hoc score for the transferred cell types has been used as a qualitative measure of cell type composition in Visium spots (Mantri et al. 2020). Giotto implements 3 methods for qualitative cell type deconvolution: First, a score based on fold change in expression of marker genes in a spot compared to the mean across spots. Second, another score scoring genes for specificity in both scRNA-seq cell types and ST or Visium spots and the sum of the top 100 gene scores is the cell type enrichment score for each spot. For these two methods, p-values are calculated from permutation testing. Third, given a fixed set of cell type marker genes, a hypergeometric test is used to test for enrichment of marker genes among top 5% expressed genes of the spot. In Tangram, the cell mapping matrix from scRNA-seq to ST or Visium can be inferred as the ground truth cell density per spot can be measured from H&E staining. When cells from scRNA-seq are mapped to spots in ST and Visium, the cell type annotations are also mapped.

The graph convolutional neural network (GCN) has been applied to cell type deconvolution, in DSTG (Su and Song 2020). First, scRNA-seq cells are randomly assigned to “spots” of 2 to 8 cells, forming a pseudo-ST dataset. Then the pseudo-ST and real ST data are projected to a CCA space, and a mutual \(k\) nearest neighbor graph is built in this space. After that both the pseudo and real ST data and the graph are fed into a GCN, trained to minimize cross entropy between imputed cell composition and actual cell composition in the pseudo-ST spots. Finally, the trained model is used to predict cell composition in real ST data.

As already mentioned in Section 7.3, some methods exploit spatial autocorrelation in gene expression to map dissociated cells from scRNA-seq to a spatial reference or to impute gene expression in space. Also as well be discussed in Section 7.7, some methods that find spatial regions based on the transcriptome favor spatial autocorrelation in cluster labels, i.e. neighboring spots or cells tend to have the same label. Cell type colocalization is also spatially autocorrelated, but spatial autocorrelation is generally not exploited in cell type deconvolution methods.

7.5 Spatially variable genes

Some genes, such as house keeping genes, are ubiquitously expressed. Such genes, while highly variable at the single cell level, may be interspersed in space so they may not show a spatial trend. Expression of some genes depends on spatial location, which can be due to cell type localization or variation within or independent from cell types. One of the goals of early prequel studies was to identify spatially variable genes, which was done manually, which can be inconsistent and labor intensive. With more quantitative data and data analysis methods, the current era brought identification of spatially variable genes to the next level. Simple methods to identify such genes include dividing the extent of the tissue into a grid and use Fisher’s exact test to test for non-random distribution of transcript counts in the grid, or to run DE between one region — be it a grid cell or a manually annotated histological region — and another region. Some more sophisticated methods have been developed that avoid the potential arbitrariness of grids and manual annotation, taking advantages of increased resolution of spatial transcriptomics. This section reviews these computational methods that identifies genes with expression that depends on spatial locations. Two principles are the most common. One is Gaussian process regression and generalization to discrete distributions with the log mean parameter modeled as Gaussian process. Another centers on Laplacian scores of graphs. There are also some additional methods using other principles.

7.5.1 Gaussian process regression

Gene expression in space can be modeled as a 2D Gaussian process. Spatial dependence of gene expression from any finite collection of locations in space can be modeled with a joint multivariate Gaussian distribution, whose covariance matrix can be defined with a kernel, which is typically defined so spatially closer cells or spots have higher covariance.

SpatialDE (Svensson, Teichmann, and Stegle 2018) is one of the more popular methods to identify spatially variable genes. Spatial gene expression is modeled as a Gaussian process, in which the mean is the mean expression level of the gene, and the covariance matrix has a spatial and non-spatial component. The spatial component uses the Gaussian kernel, in which the covariance decays exponentially with squared distance between cells or spots, with rate of decay controlled by a length scale parameter. In the null model, the gene expression follows a Gaussian distribution without covariance between cells or spots. Then the model likelihood of the fitted full model and the null model are compared with log likelihood ratio test. The log likelihood ratios under null model are asymptotically \(\chi^2\) distributed, and this distribution is used to calculate the p-values of the test. If a gene is found to be significantly spatially variable, then the full model can be fitted with two other kernels, linear and periodic, and compared to the Gaussian kernel with Bayesian Information Criterion (BIC) to discover linear and periodic patterns. As gene expression is discrete and not Gaussian, the data needs to be normalized before applying SpatialDE; even then, data normalization does not make the data Gaussian.

The discrete, non-Gaussian distribution of gene expression is directly modeled by SPARK (S. Sun, Zhu, and Zhou 2020). Gene expression is modeled by a Poisson distribution, with a rate parameter scaled by total transcript count at the spot or cell of interest. The log rate parameter contains a linear model for non-spatial variation in gene expression and can include cell or spot level covariates such as cell type, with non-spatial residuals. The spatial dependence is modeled by a zero mean Gaussian process with either a Gaussian or cosine kernel for the covariance matrix and 5 different length scale parameters are tried for each kernel type, so 10 kernels are tried. The model is fitted with one kernel at a time, with a penalized quasilikelihood algorithm. The p-values are estimated by Satterthwaite method, and the p-values from the 10 kernels are combined with the Cauchy p-value combination rule.

Gene expression data may better be modeled with NB or ZINB, which is done in GPcounts (BinTayyash et al. 2020). The log of the mean parameter of the NB or ZINB, scaled by total transcript count at the cell or spot, is modeled with a Gaussian process with Gaussian kernel for covariance. For ZINB, the dropout probability is related to the NB mean by a Michaelis-Menten equation. For one sample, the null hypothesis a constant model, a Gaussian with fixed mean and no covariance between cells or spots, i.e. gene expression does not vary in space. Spatially variable genes are identified with the log likelihood ratio test as in SpatialDE. For two samples, the null hypothesis is that two samples have the same gene expression pattern, and the alternative hypothesis is that two different Gaussian processes are required to model the two samples. Three models are fitted, one for each sample and another fit with both samples as replicates, and the SpatialDE likelihood ratio test is used to compare the separate models to the shared one. The models are fitted with a sparse approximation of variational Bayesian inference. A similar ZINB model is used in BOOST-GP (Qiwei Li et al. 2021), but instead of using the likelihood ratio test, the model is fully Bayesian. Whether a gene is spatially variable is a parameter in the model that indicates whether a kernel other than white noise (covariance among the locations is the identity matrix) is appropriate for a gene of interest, and the posterior distributions of the parameters are sampled with Markov chain monte carlo (MCMC).

The size of the covariance matrix of the cells or spots grows quadratically with the number of cells or spots. To speed up computation, SMODE aggregates cells or spots into nodes with SOM, reducing the size of the covariance matrix, before proceeding to a SpatialDE-like test (Hao et al. 2021).

7.5.2 Laplacian score

GLISS (Junjie Zhu and Sabatti 2020) has already been mentioned as a method to reconstruct scRNA-seq data in space by projecting scRNA-seq data into a 1 to 3 dimensions that stand for spatial dimensions. The first step of GLISS is to identify spatially variable genes in the spatial reference as landmark genes. In 2005, the Laplacian score was proposed as a method of feature selection, which favors features that preserves the local structure of the data in the feature space and has large variance (He et al. 2020). In GLISS, a spatial neighborhood graph is constructed on the spatial reference; two cells or spots have larger edge weight if they are physically close to each other. By default, the graph is a mutual nearest neighbor graph, in which cells or spots are nodes and an edge connects two nodes if they are mutual \(k\) nearest neighbors. Then for each gene, a Laplacian score is computed using the gene of interest and the graph Laplacian of the spatial neighborhood graph. Genes with low Laplacian scores are chosen as landmark genes, as a low score favors similarity of gene expression in nearby cells or spots and large variance among the spots, which means spatially coherent regions with high and low expression of the gene. The p-value of the gene is computed by permuting expression of the gene of interest among cells and recomputing the score.

The simplical complex is a generalization of the graph that not only includes nodes and edges but also triangles, tetrahedrons, and their higher dimensional generalizations. RayleighSelection implements generalizations of the Laplacian score for simplical complexes for clustering-free DE (Govek, Yamajala, and Camara 2019). The 1-dimensional Laplacian score, a generalization in which gene expression values are attributed to edges rather than nodes, has been used for DE in scRNA-seq data. The nodes here are clusters of cells and two nodes are connected by an edge when they intersect, as in topological data analysis (TDA) (Rizvi et al. 2017). P-values of genes were computed by permutation test, permuting expression of a gene of interest among cells. For spatial data, the spatial neighborhood graph was created as the Vietoris-Rips complex. The 0-dimensional, which is the same as the original Laplacian score, was used to identify spatially variable genes. The graph was also created for cells from pairs of cell types and the Laplacian score, with feature as cell type label, was used to identify cell type colocalization.

7.5.3 Other principles